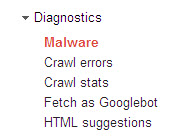

Google’s Webmaster Tools provides a set of diagnostics that help you make necessary repairs to your website. The information covers issues such as the presence of Malware, duplicate pages, missing pages (404 errors) and suggestions for improving your HTML. So it seems the Google Bots are not so lazy after all!

Some of the information provided by Webmaster Tools gets very technical, so you may want to get your Webmaster to have a look at the diagnosis (or get a knowledgeable friend or family member to do so). However, you should be able to act on some of the information yourself and I will confine myself to a couple of these aspects.

Identifying and removing Malware

Webmaster Tools, as indicated in the above image, provides information about Malware that is affecting your website. According to Malwarebytes, Malware represents 97% of all online threats. Malware enables the perpetrator to steal your personal information (passwords, logins, etc.) and disrupt your computer. Malware includes computer invasions such as ‘viruses, worms, trojans, rootkits, dialers and spyware’. Webmaster Tools will identify the threats and give you links to advice on how to remove them. A better way to go is to be proactive and use a program that will detect, prevent and destroy Malware. The Anti-Malware program that I use, and that is recommended by many technical experts (100 million downloads worldwide), is Malwarebytes.

If you take the opportunity to protect your website with an anti-malware program, then you will see the following message from Google’s Webmaster Tools (reported about my Small Business Odyssey site):

Google has not detected any malware on this site.

Clearing out duplicate webpages

Webmaster Tools also advises you of other problems with your website that can be readily rectified. One of these is duplicate pages. Sometimes, you may have created a new version of an old page and accidentally left the old version on the website, resulting in duplication of the page. This happened recently with the website for my own human resources business. Google’s Webmaster Tools will advise you of this duplication under the ‘HTML suggestions’ menu item (see image above). The duplicate pages I identified were listed under the sub-menu item, ‘Pages with duplicate meta descriptions’ (in other words, pages whose description text in the HTML head is substantially the same).
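If you would like to check for duplicate meta descriptions yourself before Google reports them, the idea can be sketched in a few lines of Python. This is only a minimal sketch under my own assumptions: the function name `find_duplicate_descriptions` and the `pages` dictionary (page address mapped to its HTML source) are made up for illustration, and this is not how Webmaster Tools itself does the check.

```python
from collections import defaultdict
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collects the content of the first <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.description is not None:
            return
        attr_map = dict(attrs)
        # Attribute values can be None (e.g. <meta name>), hence the `or ""`.
        if (attr_map.get("name") or "").lower() == "description":
            self.description = (attr_map.get("content") or "").strip()


def find_duplicate_descriptions(pages):
    """Return {description: [urls]} for descriptions used by 2+ pages.

    `pages` is a hypothetical dict of {url: html_source}.
    """
    by_description = defaultdict(list)
    for url, html in pages.items():
        parser = MetaDescriptionParser()
        parser.feed(html)
        if parser.description:
            # Ignore differences in letter case and spacing when comparing.
            key = " ".join(parser.description.lower().split())
            by_description[key].append(url)
    return {text: urls for text, urls in by_description.items() if len(urls) > 1}
```

Any page address that appears in the result shares its description with at least one other page, which makes it a good candidate for deleting the old version or rewriting the description.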

Dealing with 404 errors – page not found

These ‘page not found’ errors (404) are reported under ‘Crawl errors’ (see image above – it means you’ve upset the Google Bots because they can’t find the webpage that was supposed to be present). 404 errors can occur because you have removed a page which is still showing in Google’s version of your sitemap or because the page you linked to no longer exists for some reason. The Google Bots try to follow the links on your site and when they hit a dead end, they report it as a 404 error.
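You can run the same kind of check as the Google Bots on a list of your own page addresses. The sketch below uses only Python’s standard library; the function names `get_status` and `find_not_found` are my own, and I am assuming your server answers HEAD requests (some do not, in which case you would need a normal GET request instead).

```python
import urllib.error
import urllib.request


def get_status(url, timeout=10):
    """Return the HTTP status code for `url` using a HEAD request.

    Network failures (urllib.error.URLError) are not caught here, so an
    unreachable server is reported as an exception rather than a status.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises for 4xx/5xx responses; the code is on the exception.
        return error.code


def find_not_found(urls, status_of=get_status):
    """Return the URLs whose status is 404, in their original order."""
    return [url for url in urls if status_of(url) == 404]
```

Calling `find_not_found` with the addresses listed in your sitemap gives you the pages that would show up as ‘page not found’ errors. The `status_of` argument is only there so the lookup can be swapped out, for example when trying the function without an internet connection.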

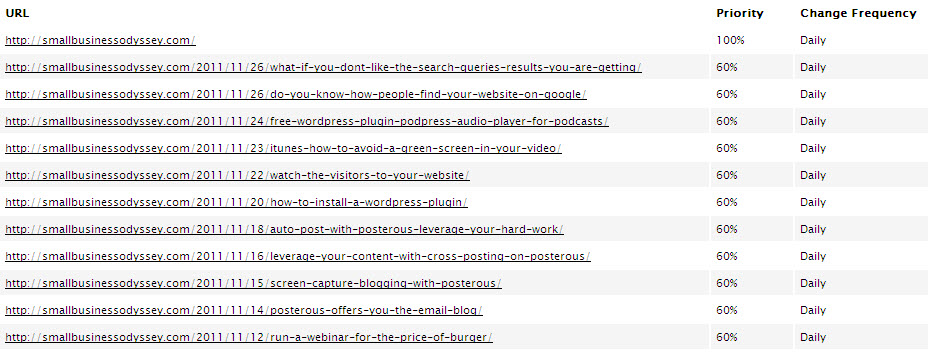

I recently found a series of 404 errors reported in Webmaster Tools for the website of my human resource consultancy business. These errors arose because we had changed the default ‘permalink’ for our blog posts (from an auto-generated number to the title of each post). The result was the following type of error reported in Webmaster Tools under ‘Crawl errors’:

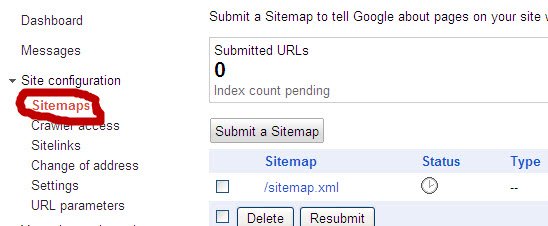

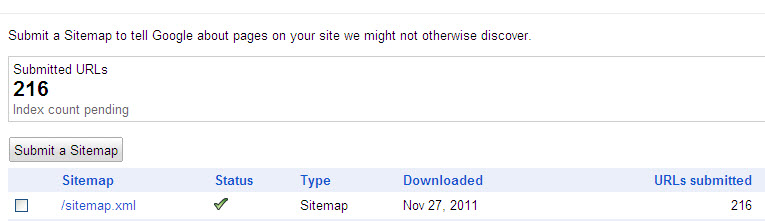

Where possible, you should clean up broken links and, where appropriate, resubmit your sitemap so that Google has a more up-to-date version to follow.
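If your website software does not produce a sitemap for you, a basic one is simple enough to generate. The sketch below builds a minimal file in the standard sitemaps.org XML format from a list of page addresses; the function name `build_sitemap` and the example addresses are my own, and optional fields such as the last-modified date are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as & automatically.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical addresses; replace them with the live pages on your site.
    sitemap = build_sitemap([
        "https://example.com/",
        "https://example.com/blog/my-post-title",
    ])
    with open("sitemap.xml", "w", encoding="utf-8") as handle:
        handle.write(sitemap)
```

Once the file lists only pages that still exist, upload it to your site and resubmit it through Webmaster Tools.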

Through its Webmaster Tools, Google gives you a lot of information to help you to keep your website functioning properly and to make it easy for the Google Bots to index your website effectively.