Let’s talk about Squidoo and its relationship with Google. Google and Squidoo have some goals in common. For example, they both want original, frequently updated content on web pages (on Squidoo, these pages are called lenses). They both want quality, not spam. Each wants searchers to be satisfied when they use its platform. One of the goals of Squidoo’s founders, for example, was to create a quality search engine.

Squidoo and Google also differ in some respects. While both want to generate a profit, one of Seth Godin’s goals in creating Squidoo was to raise $100 million for charity. Hence the option for Lensmasters to donate their advertising revenue to approved Squidoo charities.

The professional relationship between Squidoo and Google

Some time ago, Seth Godin provided strategic advice to Google as a consultant. As one of the world’s leading Internet marketers, Seth was well placed to do this. His blog, Seth Godin’s Blog, has a million readers a day, and he has written 10 New York Times bestsellers on Internet marketing.

However, the relationship is strictly professional. When Squidoo was overrun by spammers, Google did not hesitate to downgrade Squidoo in its search results to protect its own revenue base, which depends on the quality of those results. You can read about this ‘Google Slap’ story on my Squidoo Marketing Strategies lens and see how Squidoo responded to improve its overall quality.

The relationship is now back on a sound footing, with Google restoring Squidoo’s high ranking and value in search results.

How can you help the relationship between Google and Squidoo?

Fundamentally, you contribute positively to this relationship when you create quality lenses that have original content and are updated regularly. When you join Squidoo, you effectively sign up to the Originality Pact, which I discussed in an earlier post.

The challenge is to keep your Squidoo lenses high in quality and up to date in the face of many other time-consuming tasks. This is a tall order if you have a lot of lenses. Developing techniques to improve your online productivity can help here. You might like to visit my lens on improving personal productivity for some ideas.

The reward of Squidoo’s relationship with Google

The core benefit of this relationship is that Google values quality Squidoo lenses highly. Once you create a quality lens and update it regularly, you can expect to be rewarded with an improved page rank, higher positions in search engine results and more web traffic. I have found it much easier to achieve a high Google page rank with a Squidoo lens than with a blog or a website.

In my anecdotal experience, my lenses have also been indexed more quickly by Google since I became a Giant Squid.

Interestingly, Google assigns each Squidoo lens its own page rank, separate from that of the Squidoo platform itself. Here are some examples from my lenses:

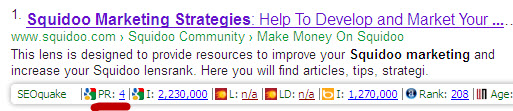

A. Keyword: squidoo marketing strategies

Lens: http://www.squidoo.com/squidoomarketingstrategies

Google Results: Position 1

Google Page Rank: 4

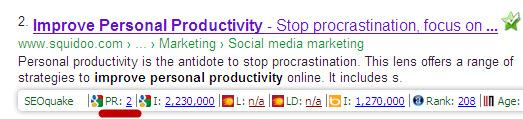

B. Keyword: improve personal productivity

Lens: http://www.squidoo.com/improve-personal-productivity

Google Results: Position 2

Google Page Rank: 2

C. Keyword: tropical rainforest walk

Lens: http://www.squidoo.com/tropicalrainforest

Google Results: Position 7

Google Page Rank: 3

These figures come from screenshots of Google search results, annotated with data from SEO Quake, a free plugin and toolbar for Mozilla Firefox and Google Chrome.

The quality of the relationship between Squidoo and Google depends on the quality and originality of Squidoo lenses: if you create excellent lenses, you can expect a good page rank and high positions in Google’s search results for your targeted keywords.